The Rising Identity Threat in Biometrics: Are Your Systems Ready for Deepfakes?

Part 1: Introduction

The rapid advancement of artificial intelligence has transformed the landscape of digital security. What began as a tool for innovation has also become a vehicle for deception. Through deepfakes and synthetic identity manipulation, bad actors can now forge hyper-realistic digital replicas capable of passing as real individuals. These sophisticated forms of fraud are testing the limits of every biometric system—from facial recognition to voice verification—and are exposing vulnerabilities that organisations can no longer afford to ignore.

Once an experimental technology with numerous flaws, artificial intelligence has advanced by leaps and bounds. It has put the ability to imitate human traits into the hands of anyone with sufficient computing power. As a result, traditional biometric door access system frameworks are being challenged in ways few anticipated. Synthetic media, forged fingerprints, and cloned voices can bypass authentication protocols that were once regarded as the benchmark of security excellence.

This evolution marks a new era in identity threats. The challenge is no longer limited to stolen passwords or duplicated access cards. It now extends to digital identities that appear completely genuine but are entirely fabricated. These fraudulent constructs are not just undermining personal privacy but also threatening enterprise networks, financial systems, and border control operations.

To stay ahead, security decision-makers must understand how these new identity threats operate and how they compromise biometric defences. The goal is not simply to react, but to build resilient systems that adapt to the nature of deception itself.

Key Takeaways

- Artificial intelligence has enabled deepfakes and synthetic identities to bypass conventional biometric verification, exposing organisations to complex and evolving risks.

- The next generation of biometric protection relies on deepfake detection, analysis of generative adversarial network (GAN) output, and models trained on synthetic data to strengthen real-time liveness verification.

- Future security will depend on adaptive, AI-enhanced authentication. This means combining face recognition and fingerprint scanner door access system technology with behavioural biometrics to counter next-generation identity threats.

Part 2: The Evolution of Fraud and Threats

2.1 Understanding Deepfakes and Synthetic Identity Fraud

The digital forgeries shaping modern identity threats are products of sophisticated artificial intelligence. Deepfakes and synthetic identities replicate the subtle intricacies of human appearance and behaviour with extraordinary precision. They are often deployed with malicious intent: to deceive verification frameworks and infiltrate protected systems.

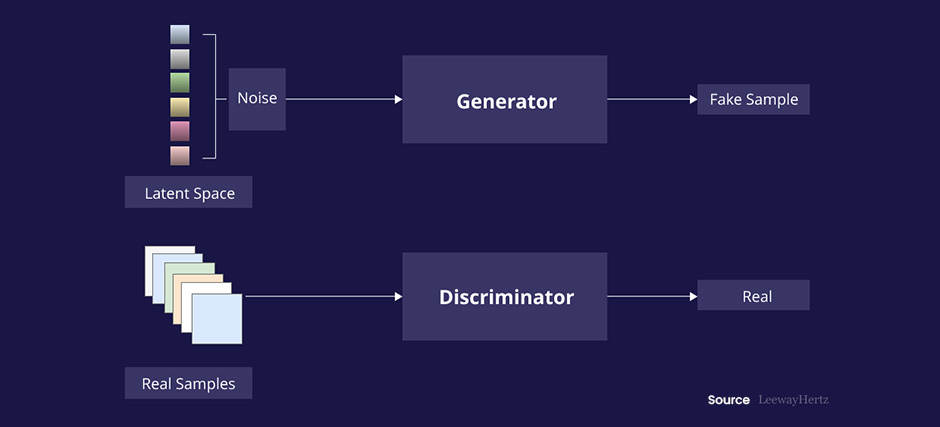

At the heart of much of this deception lies the generative adversarial network (GAN), a class of machine learning model that learns to turn random input into realistic content. In a GAN, two neural networks compete. A generator creates forged samples, while a discriminator attempts to tell them apart from real data. Over repeated training cycles, the generator becomes highly proficient at fabricating data that can appear authentic to both human observers and automated systems.
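In the standard GAN formulation, this competition is written as a minimax game between a generator G (the network that creates forged data) and a discriminator D (the network that tries to detect it). Here D(x) is the discriminator's estimated probability that a sample x is real, and G(z) is the synthetic sample produced from a random noise vector z. Production deepfake tools often use variants of this objective, so treat it as the textbook version rather than the exact recipe any given attacker uses:

```latex
\min_{G}\max_{D}\; V(D,G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator pushes V up by scoring real samples near 1 and forgeries near 0. The generator pushes V down by producing forgeries the discriminator scores as real. That tug-of-war is what drives the realism described above.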

A deepfake is a synthetic video, image, or audio file that convincingly imitates a real person. A synthetic identity, on the other hand, is a fabricated persona assembled from a mix of real and invented information. Both can be used to exploit the vulnerabilities of a door access system, an online verification process, or a financial network.

These forgeries can replicate a person’s face, fingerprint, or voice pattern closely enough to deceive even a well-calibrated biometric door access system. The danger lies in their realism: an attacker could generate a synthetic fingerprint or facial image that passes as genuine whenever no human is supervising the check. As a result, identity threats have evolved beyond static data breaches into a complex arena of digital impersonation.

Fraudsters use these methods to:

- Access restricted facilities protected by biometric setups, such as a fingerprint door access system.

- Bypass identity verification processes in remote or mobile authentication platforms.

- Launch large-scale scams by impersonating corporate executives or authorised users.

The implications are profound. Traditional validation mechanisms, built for predictable risks, were never designed to detect synthetic data imitating real biometrics. They operate on the assumption that the input presented, be it a fingerprint or face scan, originates from a living individual. Today, that assumption can no longer hold.

To maintain integrity, organisations must evolve their defences. Modern biometric systems now incorporate artificial intelligence not just to identify, but to interpret. They analyse depth, texture, and context to differentiate a living subject from a fabricated one. Without this, identity threats will continue to outpace security innovation.

2.2 How Deepfakes Challenge Traditional Biometric Systems

The foundation of biometric authentication lies in the uniqueness of human traits. Fingerprint ridges, facial geometry, and vocal frequencies were once considered impossible to counterfeit. Yet, with modern AI capabilities, the boundaries of imitation have all but dissolved.

Traditional biometric systems often rely on static or two-dimensional data, which deepfake tools can easily manipulate. For instance, if a facial recognition platform scans only surface features, an attacker can present a high-resolution synthetic face generated by a GAN. The result is a seamless deception that evades detection.

The weaknesses are multifaceted:

- Facial Recognition Vulnerabilities: AI-generated facial animations can simulate real expressions, making it difficult for legacy face recognition door access system frameworks to distinguish between live and artificial inputs.

- Voice Spoofing: Voice deepfakes can replicate tone, inflection, and speech rhythm with near-perfect precision. Without deepfake detection mechanisms, these imitations can easily mislead voice authentication services.

- Fingerprint and Iris Duplication: High-resolution imaging can capture unique biometric patterns, which attackers can then reproduce as printed or moulded replicas. When these forgeries are paired with weak scanning technology, even a fingerprint scanner door access system may grant entry to unauthorised users.

Many of these weaknesses arise from a lack of liveness detection. Legacy systems check “what” is presented but not “how” it behaves. They can confirm a match but cannot confirm whether that match belongs to a living person. This absence of behavioural context creates a gap that attackers can exploit.

Equally concerning is the use of synthetic data in bypass attempts. Fraudsters can train machine learning models on biometric samples to generate fabricated templates that mimic the characteristics of real individuals. Such forgeries can go undetected by systems that rely solely on pattern recognition.

To address these vulnerabilities, developers and security architects must adopt an AI-centric approach. Artificial intelligence in security enables the recognition of subtle inconsistencies that betray deepfakes, such as minute lighting errors, pixel irregularities, or unnatural skin texture. These cues, often invisible to the human eye, are key to proactive deepfake detection.

In this new landscape, identity threats are not static—they learn, adapt, and evolve. Consequently, biometric defence systems must do the same.

Part 3: Adaptive Biometrics and Countermeasures

3.1 Advanced Techniques in Biometric Anti-Spoofing

As fraudsters refine their techniques, the countermeasures must evolve faster. Modern anti-spoofing solutions now integrate behavioural analysis, 3D imaging, and machine learning algorithms to validate authenticity in real time.

Liveness detection has emerged as one of the most effective safeguards. It evaluates involuntary signs of life to determine whether the presented subject is alive. These include eye blinks, pupil dilation, facial micro-movements, and facial temperature gradients. When integrated with a biometric system, these mechanisms make it far harder for deepfake forgeries to succeed.
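One widely used way to turn "eye blinks" into a measurable signal is the eye aspect ratio (EAR). EAR is computed from six landmarks around each eye and drops sharply while the eye is closed. The sketch below is a minimal illustration, assuming an external face-landmark detector (not shown) already supplies the six points per frame. The 0.2 threshold, the two-frame minimum, and the helper names are hypothetical choices, not values from any particular product. A printed photo will never blink, but a replayed video can, so this is one liveness signal to combine with others.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio (EAR) from six landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2 and p3 lie on the upper lid,
    with p6 and p5 directly below them on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    closed_run = 0
    for ear in ear_series:
        if ear < threshold:
            closed_run += 1
            continue
        if closed_run >= min_frames:
            blinks += 1
        closed_run = 0
    # A blink still in progress at the end of the clip also counts.
    if closed_run >= min_frames:
        blinks += 1
    return blinks


def passes_blink_check(ear_series: Sequence[float], required_blinks: int = 1) -> bool:
    """Hypothetical liveness rule: require at least `required_blinks` blinks in the clip."""
    return count_blinks(ear_series) >= required_blinks
```

In use, the per-frame EAR (typically averaged over both eyes) is appended to a list for the duration of the capture window. `passes_blink_check` is then called once at the end of the window.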

Another important layer is the challenge-response mechanism. By prompting users to perform randomly chosen actions, such as turning their heads or repeating spoken phrases, the biometric door access system verifies real-time responsiveness. A pre-recorded synthetic video or voice clip cannot anticipate which prompts will be issued, so it cannot reproduce the expected spontaneous behaviour.
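The core of a challenge-response check is that the prompt is unpredictable, single-use, and short-lived. The following sketch shows that logic under stated assumptions. The action names are hypothetical, and the list of `observed` actions would come from a separate action-recognition model that is not shown. It uses Python's `secrets` module so an attacker cannot predict the sequence in advance. Note that this mainly defeats replayed recordings; a live, real-time deepfake operated by a human could still attempt to follow the prompts, which is why it is paired with the other layers described here.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

# Hypothetical prompt vocabulary; a deployed system would list only the actions
# its camera or microphone pipeline can actually recognise.
ACTIONS = ("turn_head_left", "turn_head_right", "look_up", "blink_twice", "smile")


@dataclass(frozen=True)
class Challenge:
    actions: Tuple[str, ...]  # the random sequence the user must perform
    nonce: str                # single-use token binding the response to this challenge
    issued_at: float          # monotonic timestamp in seconds


def issue_challenge(length: int = 3, now: Optional[float] = None) -> Challenge:
    """Draw an unpredictable action sequence using a cryptographically secure RNG."""
    actions = tuple(secrets.choice(ACTIONS) for _ in range(length))
    issued_at = time.monotonic() if now is None else now
    return Challenge(actions, secrets.token_hex(8), issued_at)


def verify_response(challenge: Challenge, nonce: str, observed: Sequence[str],
                    responded_at: float, timeout_s: float = 5.0) -> bool:
    """Accept only a fresh, in-order reproduction of the issued actions."""
    if not secrets.compare_digest(nonce, challenge.nonce):
        return False
    elapsed = responded_at - challenge.issued_at
    if elapsed < 0 or elapsed > timeout_s:
        return False
    return tuple(observed) == challenge.actions
```

The five-second timeout is an assumed value. A shorter window makes it harder for an attacker to synthesise a matching response on the fly, at the cost of more retries for genuine users.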

3D and depth sensing technologies also play a crucial role. Unlike two-dimensional cameras, 3D sensors capture distance, depth, and volumetric data, which exposes flat or simulated sources such as printed photos and screens. When paired with AI, this approach allows security frameworks to reject fraudulent attempts in real time.
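A simple way to see why depth data exposes flat sources is to fit a plane to depth samples from the face region and measure how far the samples deviate from it. A photo or screen, even when tilted, fits a plane almost perfectly. A real face, with its nose, cheeks, and eye sockets, does not. This is a minimal sketch, assuming depth samples in millimetres and a hypothetical 3 mm RMS threshold. A curved screen or a 3D mask would not be caught by this check alone.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]  # (x, y, depth), all in millimetres


def _det3(m: Sequence[Sequence[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points: Sequence[Point3]) -> Tuple[float, float, float]:
    """Least-squares fit of depth = a*x + b*y + c (normal equations, Cramer's rule)."""
    n = float(len(points))
    sx = sum(x for x, _, _ in points)
    sy = sum(y for _, y, _ in points)
    sz = sum(z for _, _, z in points)
    sxx = sum(x * x for x, _, _ in points)
    syy = sum(y * y for _, y, _ in points)
    sxy = sum(x * y for x, y, _ in points)
    sxz = sum(x * z for x, _, z in points)
    syz = sum(y * z for _, y, z in points)
    a_mat = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    det = _det3(a_mat)
    if abs(det) < 1e-9:
        raise ValueError("sample points are collinear; cannot fit a plane")
    coeffs: List[float] = []
    for col in range(3):
        # Cramer's rule: replace one column with the right-hand side.
        m = [row[:] for row in a_mat]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(_det3(m) / det)
    return coeffs[0], coeffs[1], coeffs[2]


def looks_flat(points: Sequence[Point3], max_rms_mm: float = 3.0) -> bool:
    """True when depth samples deviate from their best-fit plane by less than `max_rms_mm` RMS."""
    a, b, c = fit_plane(points)
    sq = [(z - (a * x + b * y + c)) ** 2 for x, y, z in points]
    return math.sqrt(sum(sq) / len(sq)) < max_rms_mm
```

Fitting a tilted plane rather than simply checking the depth range matters here. A phone held at an angle has a large depth range but still lies on a plane.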

Beyond physical traits, behavioural biometrics are redefining the notion of identity. Instead of relying on fixed patterns, they analyse dynamic behaviours such as typing cadence, touchscreen pressure, and navigation habits. These signals form an additional layer of verification that is far more difficult to replicate, even for attackers equipped with synthetic data.
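Typing cadence can be illustrated with a basic keystroke-dynamics comparison. During enrolment the user types a fixed phrase several times. Later attempts are then scored by how far their inter-key timings deviate from the enrolled pattern. The sketch below uses a mean absolute z-score as a deliberately simple distance. The 2.0 threshold, the 1 ms spread floor, and all function names are hypothetical. Production systems typically use richer features (such as key hold times) and trained models.

```python
import statistics
from typing import List, Sequence, Tuple

Profile = Tuple[List[float], List[float]]  # per-interval means and standard deviations


def inter_key_intervals(timestamps_ms: Sequence[float]) -> List[float]:
    """Time between consecutive key presses of a fixed phrase."""
    return [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]


def build_profile(enrolment_samples: Sequence[Sequence[float]]) -> Profile:
    """Enrol from two or more typings of the same phrase (statistics.stdev needs >= 2)."""
    columns = list(zip(*(inter_key_intervals(s) for s in enrolment_samples)))
    means = [statistics.fmean(col) for col in columns]
    # Floor the spread at 1 ms so a perfectly consistent interval cannot cause division by zero.
    stdevs = [max(statistics.stdev(col), 1.0) for col in columns]
    return means, stdevs


def cadence_distance(profile: Profile, sample: Sequence[float]) -> float:
    """Mean absolute z-score of the sample's intervals against the enrolled profile."""
    means, stdevs = profile
    intervals = inter_key_intervals(sample)
    if len(intervals) != len(means):
        raise ValueError("sample must be the same phrase as enrolment")
    return statistics.fmean(abs(x - m) / s for x, m, s in zip(intervals, means, stdevs))


def cadence_matches(profile: Profile, sample: Sequence[float], max_distance: float = 2.0) -> bool:
    return cadence_distance(profile, sample) <= max_distance
```

Each sample is a list of key-press timestamps in milliseconds for the same phrase. A genuine user's timings cluster near the enrolled means, while an impostor or a scripted input usually drifts several standard deviations away.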

The future of anti-spoofing lies in the integration of these systems. By combining multiple factors, such as facial recognition, fingerprint scans, and behavioural cues, organisations can build multi-modal frameworks that are resilient to evolving identity threats.

At leading security system providers like iDLink Systems, such layered integration is already shaping practical applications. For instance, a corporate facility might use both a face recognition and fingerprint door access system for dual-factor verification. The combination reduces dependency on any single trait, significantly lowering the probability of successful spoofing attempts.
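A face-plus-fingerprint arrangement like the one above can be sketched as score-level fusion with a per-modality floor. The rule requires each trait to clear its own minimum, so a strong face match cannot compensate for a clearly failed fingerprint. It then also requires a combined weighted score to pass. This is an illustrative design, not a description of any vendor's implementation. It assumes each matcher outputs a score normalised to the range 0 to 1, and the weights and thresholds are hypothetical.

```python
from typing import Mapping


def weighted_score_fusion(scores: Mapping[str, float], weights: Mapping[str, float]) -> float:
    """Weighted mean of per-modality match scores, each assumed normalised to [0, 1]."""
    if set(scores) != set(weights):
        raise ValueError("every modality needs both a score and a weight")
    total_weight = sum(weights.values())
    return sum(scores[m] * weights[m] for m in scores) / total_weight


def grant_access(scores: Mapping[str, float], weights: Mapping[str, float],
                 min_per_modality: Mapping[str, float], min_fused: float = 0.8) -> bool:
    """Dual-factor rule: every modality must clear its own floor AND the fused score must pass."""
    each_passes = all(scores[m] >= floor for m, floor in min_per_modality.items())
    return each_passes and weighted_score_fusion(scores, weights) >= min_fused
```

For example, with equal weights, a face score of 0.9 and a fingerprint score of 0.85 fuse to 0.875 and are accepted. A near-perfect face score paired with a fingerprint score of 0.4 is rejected by the per-modality floor.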

As identity threats become more intricate, organisations must recognise that there is no single foolproof solution. Instead, resilience emerges through intelligent layering, where machine learning, depth sensing, and behavioural analysis converge to outpace deception.

3.2 Industry Solutions and Standards in Development

The growing threat of biometric manipulation has accelerated global collaboration among industry leaders, research bodies, and policy regulators. From new testing standards to AI-assisted fraud analytics, the security sector is collectively reshaping how biometric systems defend against deepfakes and synthetic impersonation.

International standards, such as those developed by ISO/IEC (notably the ISO/IEC 30107 series on biometric presentation attack detection) and NIST, are setting benchmarks for biometric spoof detection. These frameworks define how systems should be tested for resilience, ensuring that products like a fingerprint scanner door access system meet specific anti-fraud performance levels. Compliance with such standards not only demonstrates technical strength but also builds public trust, a critical factor in any security deployment.
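Two headline metrics from ISO/IEC 30107-3 show what "anti-fraud performance levels" means in practice. APCER (attack presentation classification error rate) is the share of spoof attempts wrongly accepted as a real person; it is reported per attack instrument species. BPCER (bona fide presentation classification error rate) is the share of genuine users wrongly rejected as attacks. The sketch below computes both from labelled test results and reports the worst-case APCER across species as a summary. The species labels and record layout are hypothetical, and this is a simplified illustration rather than a certified test harness.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

# One test presentation: (is_attack, attack_species or None, flagged_as_attack_by_system)
Presentation = Tuple[bool, Optional[str], bool]


def pad_error_rates(results: Iterable[Presentation]) -> Tuple[Dict[str, float], float, float]:
    """Return (APCER per attack species, worst-case APCER, BPCER)."""
    attack_counts = defaultdict(lambda: [0, 0])  # species -> [missed attacks, total attacks]
    bona_fide_rejected = 0
    bona_fide_total = 0
    for is_attack, species, flagged in results:
        if is_attack:
            attack_counts[species][1] += 1
            if not flagged:  # attack wrongly accepted as a real person
                attack_counts[species][0] += 1
        else:
            bona_fide_total += 1
            if flagged:  # real person wrongly rejected as an attack
                bona_fide_rejected += 1
    apcer = {s: missed / total for s, (missed, total) in attack_counts.items()}
    worst_apcer = max(apcer.values(), default=0.0)
    bpcer = bona_fide_rejected / bona_fide_total if bona_fide_total else 0.0
    return apcer, worst_apcer, bpcer
```

The two rates pull against each other. Tightening the detector lowers APCER but usually raises BPCER, which is why both are reported together.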

At the same time, machine learning is being refined for continuous threat recognition. Through artificial intelligence in security, algorithms can identify emerging spoofing trends and automatically update their defences. These systems learn from every attempt, whether legitimate or fraudulent, enhancing their accuracy with each interaction.

AI-enhanced fraud detection also makes use of deepfake detection algorithms that cross-reference known identity templates with real-time behaviour. These mechanisms do not rely solely on stored biometric data but observe contextual variables like environment lighting, reflection patterns, or device interaction timing. The goal is to verify authenticity dynamically rather than statically.

One of the most promising advances in the fight against identity threats is multi-modal biometrics. This approach combines several identification methods, such as facial geometry, fingerprint texture, and voice signature, into a single unified model. By doing so, it reduces the probability that all modalities could be successfully spoofed at once.
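The arithmetic behind that claim can be made explicit under two simplifying assumptions. First, the modalities fail independently. Second, an attacker must defeat every one of them. Under these assumptions, the chance of a complete spoof is the product of the per-modality spoof success rates p_i. The rates in the example are hypothetical, chosen only to illustrate the calculation:

```latex
P(\text{all } k \text{ modalities spoofed}) = \prod_{i=1}^{k} p_i,
\qquad \text{e.g. } p_{\text{face}} = p_{\text{voice}} = 0.05 \;\Rightarrow\; 0.05 \times 0.05 = 0.0025
```

In practice the modalities are not fully independent. For instance, face and voice can both be harvested from the same public video. The real probability is therefore likely to be higher than this product, which is best read as an optimistic estimate.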

Emerging solutions also involve decentralised identity systems supported by encryption and blockchain technology. These platforms eliminate the need to store biometric data in a single location, making it much harder for attackers to obtain large data sets for synthetic data creation.

As the field matures, the convergence of AI, hardware, and ethical governance will define the next generation of security. The integration of artificial intelligence in security with stringent certification standards offers a path forward—one that acknowledges the adaptability of identity threats while continually reinforcing trust in technology.

Frequently Asked Questions

1. Can deepfakes really fool biometric authentication systems and solutions?

Yes. Deepfakes now replicate facial features, voice patterns, and behavioural cues with high accuracy, which means poorly defended systems can be deceived. Without robust liveness checks, contextual analysis, and multi-modal verification, traditional biometrics may accept synthetic inputs as genuine. The most reliable defence is to treat deepfakes as active identity threats, then layer deepfake detection, behavioural analytics, and ongoing model updates to prevent successful spoofing.

2. What is the difference between liveness detection and traditional biometric verification?

Traditional verification compares a presented trait, such as a face, fingerprint, or voice, against a stored template to confirm a match. Liveness detection adds a further step, verifying that the trait comes from a real, present person rather than an image, recording, mask, or AI-generated artefact. Techniques include micro-movement analysis, depth sensing, texture and reflection checks, and challenge-response prompts. This additional safeguard directly addresses modern identity threats that rely on synthetic media and replay attacks.

3. How can organisations future-proof their biometric systems against emerging threats?

Adopt a layered, adaptive strategy designed for evolving identity threats:

- Integrate AI-based fraud analytics, anomaly scoring, and real-time behavioural monitoring.

- Use multi-modal biometrics to reduce reliance on any single trait.

- Align with evolving standards and testing regimes, such as ISO/IEC and NIST guidelines, and schedule regular system re-certification.

- Prioritise strong liveness detection, secure template storage, and end-to-end encryption of biometric data at rest and in transit.

Conclusion

Deepfakes and synthetic identities represent a turning point for the global security landscape. They blur the line between authenticity and illusion, forcing every organisation to reconsider the reliability of its biometric defences. Traditional frameworks built on static recognition can no longer stand alone. The emergence of AI-driven deception has changed the rules of verification, making adaptability the new foundation of trust.

The fight against identity threats is no longer about building stronger walls, but about creating smarter systems. A secure door access system today must not only recognise who is entering but also confirm that the individual is genuine and present in real time. Liveness detection, behavioural monitoring, and continuous AI analysis are transforming how authenticity is measured and verified.

By combining deepfake detection, GAN analysis, and training on synthetic data, the industry is moving toward self-learning authentication models. These systems evolve alongside the very threats they defend against, detecting anomalies before they escalate into breaches. The integration of artificial intelligence in security allows each biometric system to adapt intelligently to new forms of deception, ensuring that protection is both dynamic and durable.

Enterprises that rely on biometric access technologies must now take a proactive stance. The risks posed by modern identity threats can no longer be mitigated through conventional methods alone. Instead, they require a holistic approach, one that unites technology, policy, and continuous innovation.

The future of security depends on collective vigilance. As synthetic manipulation becomes more accessible, the only effective response is to stay ahead of it through education, research, and rapid adaptation. By investing in smarter authentication infrastructures, organisations can transform uncertainty into resilience.

Here at iDLink Systems, we understand the modern security challenges that organisations face, and our access systems are designed to tackle a new wave of identity threats, keeping vital assets and information safe and secure.

Is your current access control ready for the next generation of deepfakes? Check out our comprehensive range of AI-powered biometric solutions, built to safeguard against the evolving landscape of identity threats with precision, intelligence, and trust.